Executive Summary

The integration of Artificial Intelligence (AI) into mental healthcare presents a landscape of both unprecedented opportunity and considerable complexity. This report examines the dual nature of AI’s role: its immense potential for expanding access to care and personalizing treatment, juxtaposed with significant challenges related to the very concept of artificial empathy, profound ethical concerns, and practical implementation hurdles. The analysis underscores a critical imperative for responsible development, robust regulatory frameworks, and a collaborative human-AI model to ensure that these technologies yield beneficial outcomes for individuals and society. The report highlights that AI is emerging not merely as an incremental improvement but as a necessary, albeit risky, intervention in a healthcare system struggling to meet escalating demand.

1. Introduction: Navigating the Intersection of AI, Empathy, and Mental Health

The global mental health landscape faces an urgent and pervasive crisis. Barriers such as persistent stigma, limited access to professional care, and significant shortages of mental health professionals contribute to increasing wait times and unmet needs worldwide.1 In this context, Artificial Intelligence is rapidly emerging as a transformative technology, poised to revolutionize mental health support from initial diagnosis to personalized treatment and enhanced accessibility.1 The promise of AI lies in its capacity to offer scalable, cost-effective solutions that can overcome many of these deep-seated structural deficiencies in traditional healthcare models.1 This positions AI not just as a beneficial tool, but as a necessary intervention in a system under immense strain, underscoring the urgency and high stakes involved in its responsible development and deployment.

However, the integration of AI into such a sensitive domain immediately brings to the forefront a unique and complex challenge: empathy. Empathy, a cornerstone of human therapeutic relationships, involves profound understanding and connection, qualities traditionally considered exclusive to human interaction. This report frames the subsequent discussion around dissecting how AI attempts to simulate this crucial human trait, the successes and limitations encountered, and the profound ethical and practical implications for mental healthcare.

2. Defining Artificial Empathy: Theoretical Frameworks and Components

Artificial empathy, also known as computational empathy, refers to the development of AI systems, such as companion robots or virtual agents, that can detect emotions and respond to them in an empathic manner.6 At its core, this involves non-human models predicting a person’s internal state (e.g., cognitive, affective, physical) based on signals they emit, such as facial expressions, voice intonations, or gestures. It also extends to predicting a person’s response to specific stimuli.6 A broader understanding of artificial empathy emphasizes creating technology that is sensitive and responsive to human emotions, moving beyond mere task completion to a more genuine form of understanding.7

A critical distinction within the concept of empathy, particularly when applied to AI, is between its cognitive and affective components.

- Cognitive Empathy: This refers to the mental or intellectual aspect of empathy: the ability to actively identify and understand cues, allowing one to mentally put oneself in another person’s position.7 AI systems can simulate this by processing emotional input, making appropriate inferences, and generating helpful responses, even without possessing subjective feeling.7

- Affective Empathy: This is the emotional or feeling part of empathy: the capacity to share or mirror another person’s feelings.7 While AI can simulate this component, experts contend that it cannot truly replicate genuine subjective feeling.7

This distinction highlights a fundamental philosophical debate: the functionalist view versus the phenomenological view. Functionalism suggests that if an AI system functions empathetically, meaning it processes emotional input, makes appropriate inferences, and generates helpful responses, then it can be considered to exhibit a form of empathy, regardless of subjective feeling.7 This perspective contrasts sharply with phenomenological views, which emphasize the indispensable role of subjective experience and qualitative feeling in genuine empathy.7 The practical implications of this definitional ambiguity are profound. If the functionalist view is accepted, the focus shifts to designing AI that behaves empathetically and is perceived as such by users.7 This approach may simplify the development process by not requiring the creation of consciousness, but it simultaneously escalates ethical concerns regarding transparency and the potential for user deception or over-attachment. The ambiguity directly influences how AI empathy is tested, how regulatory frameworks are designed, and ultimately, how society trusts and interacts with these systems.

Computational models of empathy aim to operationalize these concepts. The Perception-Action Model (PAM), for instance, posits that perceiving another individual’s emotional state automatically triggers corresponding representations within the observer’s neural and bodily systems, forming a biological foundation for empathy.10 Building upon this, the Empathy Simulation System (ESS) is a computational framework designed to emulate key components of human empathy. The ESS processes environmental inputs, such as facial expressions, body posture, vocal intonations, and situational context, to infer perceived emotions.10 This information then moves to an Empathy Appraisal stage, where it is integrated with situational understanding to formulate an internal response. Finally, through Empathy Response Processing and an Empathy Response Module, the system generates contextually appropriate and emotionally supportive responses.10 Large Language Models (LLMs) are leveraged to process nuanced information and generate responses that are perceived as empathic.10
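The staged flow described for the ESS (perception, appraisal, response processing, response generation) can be illustrated with a minimal Python sketch. This is not the ESS implementation: every name here (`Observation`, `CUE_TO_EMOTION`, `perceive`, `appraise`, `process_response`, `respond`, `run_pipeline`) is hypothetical, and a small lookup table and fixed templates stand in for the trained classifiers and LLM that a real system would use.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """Hypothetical bundle of the input signals the text lists."""
    facial_expression: str
    posture: str
    vocal_tone: str
    context: str


# Assumption: a toy cue-to-emotion table replaces real perception models.
CUE_TO_EMOTION = {
    "frown": "sadness", "slumped": "sadness", "flat": "sadness",
    "smile": "joy", "upright": "joy", "bright": "joy",
}

# Assumption: fixed templates replace LLM-generated text.
TEMPLATES = {
    "validate": "That sounds hard. It makes sense to feel this way about {context}.",
    "celebrate": "That is great news about {context}!",
    "acknowledge": "Thanks for telling me about {context}.",
}


def perceive(obs: Observation) -> str:
    """Stage 1: infer a perceived emotion by majority vote over the cues."""
    votes = {}
    for cue in (obs.facial_expression, obs.posture, obs.vocal_tone):
        emotion = CUE_TO_EMOTION.get(cue, "neutral")
        votes[emotion] = votes.get(emotion, 0) + 1
    return max(votes, key=votes.get)


def appraise(emotion: str, context: str) -> dict:
    """Stage 2: combine the perceived emotion with situational context."""
    return {"emotion": emotion, "context": context,
            "needs_support": emotion == "sadness"}


def process_response(appraisal: dict) -> str:
    """Stage 3: choose a response strategy from the appraisal."""
    if appraisal["needs_support"]:
        return "validate"
    if appraisal["emotion"] == "joy":
        return "celebrate"
    return "acknowledge"


def respond(strategy: str, context: str) -> str:
    """Stage 4: render the chosen strategy as text."""
    return TEMPLATES[strategy].format(context=context)


def run_pipeline(obs: Observation) -> str:
    """Run all four stages in order."""
    appraisal = appraise(perceive(obs), obs.context)
    return respond(process_response(appraisal), appraisal["context"])
```

Calling `run_pipeline(Observation("frown", "slumped", "flat", "losing a job"))` selects the "validate" template. Keeping the stages as separate functions mirrors the modular description in the text and makes each stage independently testable, which matters given the interpretability concerns raised in this report.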

However, the very success of AI in simulating empathy through such systems, which often operate as “black boxes” whose decision-making processes are not easily interpretable 11, creates a deeper challenge. Users may perceive AI as empathetic 9, but this perception is based solely on outward behavior, not on a shared internal state or genuine understanding. This can lead to a significant trust deficit 2 if users discover the simulation is not “genuine” or if responses are misaligned with their true emotional needs. This raises critical questions about the long-term psychological impact on users, particularly vulnerable populations, and underscores an ethical imperative for clear disclosure about AI’s capabilities and inherent limitations.

3. Current Landscape: AI Applications and Benefits in Mental Healthcare

Artificial intelligence is fundamentally revolutionizing mental health with groundbreaking tools that span diagnosis, treatment, and research, effectively bringing mental health support into the digital age.5 This technological advance offers scalable and cost-effective solutions, addressing critical barriers such as social stigma and limited access to traditional care.1

Specific applications of AI in mental healthcare include:

- Diagnostic Support: AI-powered systems are increasingly assisting clinicians in diagnosing mental health disorders. Machine learning algorithms analyze vast datasets, including electronic health records, speech patterns, and behavioral data, to detect early signs of conditions like depression, anxiety, and schizophrenia.1 For instance, voice analysis tools can identify subtle changes in speech that correlate with mood disorders, providing objective data to complement clinical judgment.1

- Predictive Analytics: AI excels at identifying individuals at risk of mental health crises. Predictive models analyze data from various sources, such as wearable devices, social media activity, and medical records, to flag warning signs like sleep disturbances or shifts in activity levels that may precede a depressive episode or suicidal ideation. These tools empower clinicians to intervene proactively, potentially preventing severe outcomes.1

- Personalized Treatment Plans: AI facilitates the development of individualized treatment plans by analyzing patient data, including genetic, behavioral, and environmental factors, to recommend evidence-based interventions. This tailored approach aims to maximize treatment efficacy and minimize trial-and-error processes, leading to more effective and efficient care.1

- AI-Driven Chatbots and Virtual Therapists: These conversational agents provide accessible 24/7 support for individuals experiencing mental health challenges. Using natural language processing, they engage users in therapeutic conversations, offering strategies derived from cognitive behavioral therapy (CBT) and emotional support.1 Popular examples include Woebot, Youper, and Wysa.13 These tools significantly improve accessibility to mental health resources, particularly in underserved areas or for those who face barriers to traditional therapy, serving as valuable tools for early intervention and ongoing self-management.1

- Virtual Reality (VR) Therapies: AI enhances virtual reality technologies used in mental healthcare, especially for treating conditions such as post-traumatic stress disorder (PTSD) and phobias. These tools simulate controlled environments where patients can safely confront their fears, with AI algorithms adapting scenarios in real time based on physiological and psychological responses.1

- Integration with Electronic Health Records (EHRs) and Telehealth: AI integrates into EHR systems, facilitating the analysis of large datasets for pattern recognition and outcome prediction.5 Furthermore, AI advances telehealth beyond virtual consultations by enabling real-time monitoring of patient health data via wearable devices and smartphone applications, allowing for prompt interventions and alleviating pressure on the broader mental healthcare system.5

AI’s role as an accessibility multiplier is evident in its potential to democratize mental healthcare, particularly for individuals facing geographic barriers, cost constraints, or the stigma associated with seeking traditional therapy.1 For instance, studies indicate that men, often slower to adopt traditional therapy, are typically early adopters of technology, suggesting AI could bridge this engagement gap.9 This broadens the reach of mental health support significantly. However, this promising expansion comes with a critical caveat: the digital divide. Differences in knowledge, education, language, wealth, and internet access can affect who can truly benefit from AI tools, potentially exacerbating existing health inequalities if not carefully addressed.2 Consequently, while AI offers a powerful solution to the current accessibility crisis, its deployment must be equitable, requiring intentional strategies to bridge digital divides through infrastructure provision, digital literacy initiatives, and multilingual support. Without such considerations, AI risks inadvertently creating a two-tiered system, further marginalizing already vulnerable populations.

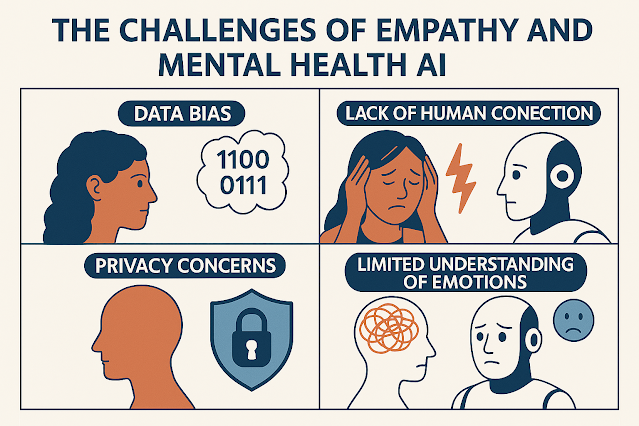

Despite the transformative potential of AI in mental health, its widespread adoption is hampered by significant ethical, clinical, and technical challenges that demand careful consideration and proactive mitigation.

4.1. Ethical and Regulatory Concerns

The ethical landscape surrounding AI in mental health is complex and fraught with potential pitfalls.

- Data Privacy and Confidentiality: Mental health data is profoundly sensitive, and its collection and analysis by AI systems raise serious concerns about who has access to this information and how it is used.2 A significant concern stems from some AI applications originating from tech startups that prioritize rapid data collection and mining over rigorous healthcare protocols, leading to potential misuse or sale of data.14 Current regulatory frameworks often fall short, with US law, for example, not considering chatbots to be mental health providers or medical devices, meaning conversations are not inherently confidential.2 This regulatory gap can lead to users having inaccurate expectations of privacy, fostering distrust, and potentially causing them to withhold crucial information or avoid seeking online help altogether.2

- Bias, Discrimination, and Equity of Access: AI models are highly susceptible to bias, which can arise from imbalanced training data, historical prejudices embedded in datasets, and algorithmic design choices.3 This can result in misdiagnosis, exclusion from beneficial treatments, and the reinforcement of systemic inequities, particularly for marginalized groups.4 For instance, models trained predominantly on data from Western populations may not accurately assess symptoms in non-Western cultures, which often express mental health struggles through physical symptoms rather than emotional distress.11

- Transparency, Accountability, and Liability: Fundamental questions remain unresolved regarding the transparency of AI’s operations, who is accountable when problems arise, and where liability lies in the event of adverse outcomes.2 Many advanced AI models, particularly deep learning systems, operate as “black boxes,” making their decision-making processes difficult to interpret and biases challenging to identify and correct.11 The absence of specific regulations means that professional codes of ethics applicable to human mental health providers often do not extend to commercial chatbot providers, creating a significant oversight gap.2

- Potential for Harm and Misuse: The most alarming ethical concerns revolve around the potential for direct harm. Documented cases reveal severe harm from the unintended uses of general-purpose companion chatbot applications, including instances where chatbots incited violence and self-harm.3 These systems may provide inappropriate advice or respond inadequately to users in crisis, often lacking robust crisis management protocols.2 Over-reliance on unproven AI tools poses significant risks, as algorithms alone cannot holistically weigh complex psychosocial factors, potentially mishandling serious cases, including those with suicidal ideation.3 Children are particularly vulnerable because of their developmental stage and the potential for over-attachment to AI companions, which could impair their social development.4

The current regulatory environment reveals a critical and dangerous lag. Multiple sources indicate that most AI mental health applications are unregulated 4, and current US law does not classify chatbots as mental health providers or medical devices.2 This absence of legal oversight, combined with documented cases of severe harm resulting from the unintended uses of general-purpose chatbots 3, highlights a profound and dangerous gap. The rapid pace of AI development, particularly with Generative AI introducing novel challenges 16, is consistently outstripping the ability of legal and ethical frameworks to adapt. This regulatory vacuum is not merely an academic concern; it represents a direct threat to public safety, leading to tragic, real-world consequences. This situation necessitates urgent, proactive legislative and industry-wide action to establish clear standards, accountability, and enforcement mechanisms.

Furthermore, a paradox emerges where AI, lauded for its potential to increase accessibility to mental health care 1, simultaneously risks exacerbating existing health inequalities. This is due to inherent biases in unrepresentative training data, which can lead to misdiagnosis or exclusion of marginalized groups.11 Compounding this, socioeconomic and accessibility barriers, such as limited internet access or digital literacy, can prevent certain populations from benefiting from AI tools.11 Consequently, without deliberate and inclusive design, deployment, and regulatory oversight, AI in mental health risks widening the health equity gap rather than closing it. True accessibility implies not just availability, but effective and safe access for all populations, which requires addressing inherent biases and digital divides at every stage of AI development and implementation.

4.2. Limitations of AI Empathy and Diagnostic Accuracy

Beyond ethical concerns, the inherent limitations of AI in replicating genuine human empathy pose significant clinical challenges.

- Lack of Contextual Understanding and Emotional Resonance: AI systems struggle to construct a holistic understanding of an individual’s life experiences, often failing to recognize emotional meaning within its broader context.21 Unlike humans, AI cannot draw from lived experiences to form deeper, resonant connections with clients, a quality central to effective human empathy in therapy.21

- Cultural Insensitivity and Misinterpretation of Cues: Algorithms used for emotion recognition in AI can misinterpret or oversimplify emotional cues across different cultural contexts.7 AI models trained predominantly on Western diagnostic frameworks may fail to recognize culturally specific manifestations of mental health conditions, leading to inaccurate assessments or inappropriate responses for diverse client populations.11

- Inaccurate Diagnosis and Overreliance Risks: Diagnosing complex mental health conditions relies on interpreting nuanced human self-disclosures and behaviors, a task AI models may struggle to perform reliably on a standalone basis.14 Overreliance on unproven AI tools poses risks, as algorithms alone cannot holistically weigh complex psychosocial factors, potentially mishandling serious cases. For example, a study found that AI chatbots tend to be "overly empathetic" in response to sad stories but "do not appear to care" during positive moments, a pattern that exaggerates human tendencies.22 This same study also revealed that the AI empathized more when told the person it was responding to was female, indicating that AI mimics and exaggerates gender biases present in its human-made training data.22

The fundamental “empathy gap” in AI stems from its inability to truly replicate affective empathy, contextual understanding, and emotional resonance.21 This is not merely a technical limitation; it creates a profound deficit in the therapeutic relationship. The absence of genuine human connection and the inability to interpret nuanced, culturally specific cues 11 mean that AI may miss crucial diagnostic subtleties or fail to build the deep trust essential for effective therapy.12 Poorly aligned or robotic responses can alienate clients, undermining the therapeutic alliance.21 This implies that AI cannot fully replace human therapists, particularly for complex, trauma-informed, or culturally sensitive mental health care. Its role must therefore be carefully delineated to augment, rather than diminish, the quality of human-centered care, with AI’s strengths perhaps better leveraged in non-empathic therapeutic pathways such as structured cognitive behavioral therapy exercises, data analysis, or progress monitoring.21

The observation that AI can amplify existing human biases, such as exaggerating gender biases in empathetic responses 22, presents a critical problem. This goes beyond simple misdiagnosis to potentially reinforcing harmful stereotypes and providing differential, inequitable care based on demographic factors. The issue is not only that AI has biases, but that it can perpetuate and exaggerate them, leading to systemic discrimination in mental healthcare. This necessitates continuous, rigorous bias detection and mitigation throughout the AI lifecycle, including the collection of diverse and representative training data and the implementation of culturally sensitive design principles, to prevent the technology from becoming a tool for further marginalization.
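One simple form of bias detection is to compare how empathetic a system’s responses are rated across demographic groups. The Python sketch below assumes that each response has already been given a numeric empathy rating (for example by human raters); the function names, the rating scale, and the 0.5 threshold are all hypothetical choices for illustration, not values taken from the cited study.

```python
from collections import defaultdict
from statistics import mean


def empathy_gap(records):
    """Return per-group mean ratings and the largest gap between groups.

    records: iterable of (group_label, empathy_rating) pairs.
    """
    by_group = defaultdict(list)
    for group, rating in records:
        by_group[group].append(rating)
    means = {group: mean(ratings) for group, ratings in by_group.items()}
    gap = max(means.values()) - min(means.values())
    return means, gap


def flag_disparity(records, threshold=0.5):
    """Flag the system for review when the gap exceeds the threshold.

    Assumption: the threshold is arbitrary and would need to be set
    with clinical and statistical input in any real audit.
    """
    _, gap = empathy_gap(records)
    return gap > threshold
```

For example, ratings of 4.0 and 5.0 for one group and 3.0 and 4.0 for another give group means of 4.5 and 3.5, a gap of 1.0, so `flag_disparity` returns `True` at the default threshold. A difference in means is only a starting point: a fuller audit would also account for sample size and variance before drawing conclusions.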

4.3. Challenges in Real-World Implementation

Even with robust ethical guidelines and improvements in AI’s empathetic capabilities, practical challenges persist in real-world deployment.

- Lack of Genuine Human Connection and Trust: Users frequently express significant concerns about AI’s perceived lack of warmth, depth, and genuine human connection.3 Building trust is a major barrier, with concerns about misinterpretation of inputs, potential misuse, manipulation, and fundamental data privacy issues undermining user confidence.2 When individuals feel that their sensitive information is not truly confidential or that the AI lacks genuine understanding, it hinders the formation of a therapeutic alliance.

- Unpredictability and Unintended Consequences: The inherent unpredictability of AI systems in mental healthcare poses significant risks, as errors or unexpected behavior can have severe consequences for vulnerable individuals.3 Documented cases include AI chatbots producing harmful or insensitive responses, and even encouraging self-harm or violent behavior.3 The “black box” nature of many AI models, whose internal reasoning is opaque, makes it exceedingly difficult to understand, predict, or prevent these dangerous outcomes.11

- Integration with Existing Healthcare Systems: While AI offers substantial benefits, its effective integration into existing healthcare infrastructure requires addressing a multitude of practical concerns, including digital literacy among both patients and clinicians, and navigating complex regulatory dynamics.5 It is crucial to ensure that AI tools genuinely complement human-delivered services rather than replacing them, maintaining a balance that preserves the human element of care.2 Furthermore, practical issues such as AI’s inability to function during power outages highlight a reliance on external infrastructure that can affect accessibility and continuity of care.2

The pervasive crisis of trust and the phenomenon often described as the “uncanny valley” of AI empathy represent significant psychological barriers to widespread adoption. Users perceive AI as lacking “warmth and depth” 12 and express distrust because of privacy concerns and the potential for misuse or manipulation.2 This goes beyond mere technical limitations; it points to a fundamental psychological discomfort where AI’s near-human empathy is unsettling or simply insufficient for the profound needs of mental health support. The documented cases of severe harm 3 further erode public trust, creating a substantial hurdle for the successful and ethical integration of AI into mental health services. Overcoming this trust crisis is paramount. It requires not only continuous technical improvements in accuracy and safety but also radical transparency, clear ethical guidelines, and robust regulatory oversight to rebuild and maintain patient confidence. Without this foundational trust, even the most technologically advanced AI will fail to achieve its potential in this sensitive domain.

5. Strategies for Responsible Development and Implementation

Addressing the multifaceted challenges of AI in mental health requires a comprehensive and proactive approach, emphasizing human-AI collaboration, rigorous bias mitigation, robust data security, and the establishment of clear regulatory frameworks.

Enhancing Human-AI Collaboration and Oversight

The optimal approach for AI integration in mental health is not replacement but a synergistic partnership, where AI augments human capabilities rather than diminishing them.23 AI excels at processing vast amounts of data, identifying patterns, and maintaining consistency in repetitive tasks, while humans contribute intuition, emotional intelligence, and complex ethical judgment.23 This model necessitates a “human in the loop” approach, where human oversight remains essential.9 Clinicians must maintain professional judgment, critically evaluate AI outputs, and actively supervise patient-AI interactions.24 Ethical guidelines strongly advocate for human supervision to address therapeutic relationship issues and ensure patient safety.2 Establishing clear boundaries for AI’s role, recognizing its strengths in data analysis while reserving creative problem-solving, ethical considerations, and nuanced decision-making for human professionals, is paramount.23 Furthermore, continuous learning and feedback loops, where AI systems learn from human feedback and behavioral patterns, are crucial for iterative improvement and fine-tuning of AI responses to align with clinical needs and patient goals.23
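A “human in the loop” arrangement can be pictured as a gate between the AI’s draft output and the patient. The Python sketch below is illustrative only: the `Draft` fields, the `route` function, and the 0.8 confidence cutoff are hypothetical, and it assumes that some upstream component supplies a confidence score and a risk flag.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    """Hypothetical AI-generated reply awaiting a routing decision."""
    text: str
    confidence: float  # assumed model self-score in [0, 1]
    risk_flag: bool    # assumed upstream signal that the user may be at risk


def route(draft: Draft, min_confidence: float = 0.8) -> str:
    """Decide whether a draft goes to the patient or to a clinician.

    Any risk flag, or confidence below the cutoff, sends the draft
    to a human reviewer instead of the patient.
    """
    if draft.risk_flag or draft.confidence < min_confidence:
        return "clinician_review"
    return "send"
```

Under these assumptions, `route(Draft("...", 0.95, True))` returns `"clinician_review"` even though confidence is high, reflecting the principle that safety-relevant cases are reserved for human judgment.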

Mitigating Bias and Ensuring Data Security

To ensure fairness and prevent discriminatory outcomes, AI models must be trained on diverse and representative datasets.11 This requires rigorous testing, fine-tuning, and regular updates to mitigate biases inherent in large language models.10 Transparency and explainability are strategic imperatives; developers and providers must share information about AI benefits, technical constraints, and any deficits in the training data.18 This openness helps build trust and allows for the identification and correction of biases that might otherwise remain hidden within “black box” systems.11 Concurrently, robust data privacy measures are non-negotiable. This includes implementing stringent data handling policies, strong security measures, and clear disclosure of how user data is collected, stored, and used.2 Establishing Business Associate Agreements (BAAs) and adhering to privacy standards such as HIPAA are crucial steps in safeguarding sensitive mental health information.24

Establishing Robust Regulatory Frameworks and Guidelines

The current regulatory lag necessitates the urgent establishment of comprehensive frameworks. Key elements include:

- Informed Consent: Therapists must obtain informed consent from patients, clearly disclosing the benefits, risks, and data practices associated with AI tools.15 Patients must be explicitly granted the right to refuse or revoke consent at any time.24

- Clinical Validation and Certification: AI systems must undergo rigorous testing to confirm their efficacy and safety before deployment.5 Global regulatory responses are already underway to address this.16

- Therapist Competence and AI Literacy: Mental health professionals require ongoing education about AI capabilities, limitations, and proper use.24 Accelerating AI literacy among patients, clinicians, and industry professionals is vital to ensure informed engagement and responsible adoption.18

- Patient Safety Considerations: Before implementing AI tools, therapists should assess patient digital literacy and any risk factors for technology-related problems, such as over-immersion or addiction.24 Continuous monitoring of AI outputs for accuracy and effectiveness is required, with frequency adjusted based on risk factors and individual patient needs.24

- Governance Frameworks: Instituting robust governance structures and advisory boards with diverse representation is essential to assess AI design and distribution protocols.18 These boards can help ensure that AI technologies are developed and deployed in a manner that maximizes adoption and utilization within underrepresented communities.

The shift from a product-centric to a system-centric approach to AI governance is becoming increasingly apparent. Mitigation strategies are moving beyond merely fixing technical bugs within AI models; they now emphasize designing, deploying, and using these technologies to benefit intended communities, in collaboration with partners and developers who possess a nuanced understanding of those affected by AI, including individuals with relevant lived experiences.18 This encompasses prioritizing infrastructure accessibility, accelerating AI literacy, ensuring adequate patient representation in development, and establishing comprehensive governance frameworks that involve multiple stakeholders.18 This integrated perspective acknowledges that AI in mental health is not just a standalone product, but an integral component of a complex healthcare ecosystem. It implies a multi-stakeholder collaborative model involving technologists, clinicians, policymakers, and, crucially, individuals with lived experience, to ensure AI genuinely serves collective well-being rather than solely advancing technological capabilities.

6. Future Outlook: Expert Predictions and Societal Implications

The trajectory of AI in mental health points toward transformative changes in healthcare delivery within the next five years, with experts predicting a future where healthcare is “significantly better” and clinicians are “happier, more productive”.9 AI is expected to significantly reduce administrative burdens and extend care capacity, thereby freeing human providers to focus on more complex cases requiring nuanced human interaction.9

However, this promising future is accompanied by a range of emerging challenges and profound societal implications:

- Human-AI Indistinguishability: Predictions suggest that by 2030, AI will be “indistinguishable from human voice to voice, video to video”.9 This raises profound questions about the ability to differentiate between artificial and real personalities, blurring the lines of human interaction and potentially leading to a redefinition of what constitutes authentic connection.25

- Impact on Human Traits: Experts express concern that the widespread adoption of AI could negatively alter fundamental human traits, including our sense of purpose and how we think, feel, act, and relate to one another.25 Specific worries include the potential for “self-inflicted AI dementia,” where over-reliance on AI systems leads to the atrophy of human cognitive abilities, and the concept of “outsourced empathy,” where AI automates acts of kindness, emotional support, and caregiving.25

- Potential for Addiction: A new challenge anticipated is the potential for individuals to develop an addiction to AI interaction, given the constant availability and tailored responses offered by these systems.9

- Ethical and Regulatory Nuances: As AI becomes more sophisticated, more nuanced conversations about regulation and ethics will be necessary, including discussions around “on-label versus off-label use of AI” in clinical contexts.9

The existential question of “being human” in the AI age looms large. The future outlook extends beyond mere medical applications to explore the fundamental impact of AI on human identity and society. Predictions of AI becoming “indistinguishable from human” 9 and concerns about “self-inflicted AI dementia” or “outsourced empathy” 25 suggest that the challenge of AI in mental health is not solely about treatment efficacy or ethical safeguards. It fundamentally concerns how AI reshapes our very understanding of human emotionality, social interaction, and cognitive function. The inquiry shifts from “Can AI be empathetic?” to a deeper, more philosophical question: “What does empathy mean for humans when AI can simulate it perfectly?” This necessitates proactive societal dialogue, extensive interdisciplinary research involving philosophy, sociology, and psychology, and ethical foresight to prevent unintended consequences such as emotional atrophy, over-reliance on artificial connections, and a diminished capacity for genuine human connection.

Despite these concerns, some experts offer optimistic counterpoints, hoping for a positive influence on human curiosity, decision-making, and creativity.25 There is a vision that a new human “Enlightenment” could begin, with AI handling routine “digital chores” and thereby allowing humans to shift their energy towards “spiritual, emotional, and experiential aspects of life”.25

The integration of Artificial Intelligence into mental healthcare represents a frontier of immense promise and significant peril. While AI offers unprecedented opportunities to enhance accessibility, improve diagnostic accuracy, personalize treatment plans, and extend the reach of mental health support to underserved populations, its current limitations in genuine empathy, holistic contextual understanding, and cultural sensitivity necessitate a cautious, human-centered approach.

The analysis underscores that AI, despite its advanced capabilities, cannot replicate the depth of human emotional resonance or the nuanced judgment essential for complex therapeutic relationships. The “empathy gap” and the potential for AI to inadvertently perpetuate or even amplify existing societal biases, coupled with the critical lag in regulatory frameworks, pose substantial risks to patient safety, privacy, and equitable access to care. The documented cases of harm from unregulated AI highlight the urgent need for robust governance.

Ultimately, the successful future of AI in mental health lies not in replacement, but in a synergistic partnership with human professionals. This requires ongoing, rigorous research to refine AI algorithms, particularly in areas of bias mitigation and explainability. It demands the establishment of comprehensive ethical and regulatory frameworks that prioritize informed consent, data privacy, accountability, and patient safety above all else. Furthermore, fostering AI literacy among both clinicians and patients, and ensuring diverse representation throughout the AI development lifecycle, are crucial steps towards building trust and ensuring equitable outcomes.

By embracing a collaborative model in which AI augments human capabilities, and by steadfastly committing to ethical principles and robust oversight, the mental health field can harness the transformative power of AI to serve collective well-being, ensuring that innovation always remains aligned with the fundamental human need for compassionate, trustworthy, and effective care.

1. Artificial Intelligence Can Revolutionize Mental Health Care …, accessed June 4, 2025, https://www.psychologytoday.com/us/weblog/the-leading-edge/202412/artificial-intelligence-poised-to-revolutionize-mental-health-care

2. Exploring the Ethical Challenges of Conversational AI in Mental Health Care: Scoping Review, accessed June 4, 2025, https://psychological.jmir.org/2025/1/e60432

3. AI in Mental Healthcare: How Is It Used and What Are the Risks? | Built In, accessed June 4, 2025, https://builtin.com/artificial-intelligence/ai-mental-health

4. My Robot Therapist: The Ethics of AI Mental Health Chatbots for Kids | URMC Newsroom, accessed June 4, 2025, https://www.urmc.rochester.edu/information/story/my-robot-therapist-the-ethics-of-ai-mental-health-chatbots-for-kids

5. AI Mental Health Applications – Mission Connection Healthcare, accessed June 4, 2025, https://missionconnectionhealthcare.com/weblog/ai-mental-health-applications/

6. Artificial empathy – Wikipedia, accessed June 4, 2025, https://en.wikipedia.org/wiki/Artificial_empathy

7. Empathy In AI → Term, accessed June 4, 2025, https://way of life.sustainability-directory.com/time period/empathy-in-ai/

8. Testing the Depths of AI Empathy: Frameworks and Challenges …, accessed June 4, 2025, https://hackernoon.com/testing-the-depths-of-ai-empathy-frameworks-and-challenges

9. What does the rise of empathetic AI mean for healthcare? – Digital Health Insights, accessed June 4, 2025, https://dhinsights.org/weblog/what-does-the-rise-of-empathetic-ai-mean-for-healthcare

10. (PDF) Empathy-Inspired AI: Developing an Affective Computation …, accessed June 4, 2025, https://www.researchgate.internet/publication/387190753_Empathy-Inspired_AI_Developing_an_Affective_Computation_Model_via_the_Perception_Action_Framework

11. (PDF) Bias and Fairness in AI-Based Mental Health Models, accessed June 4, 2025, https://www.researchgate.internet/publication/389214235_Bias_and_Fairness_in_AI-Based_Mental_Health_Models

12. AI as the Therapist: Student Insights on the Challenges of Using Generative AI for School Mental Health Frameworks – PubMed Central, accessed June 4, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC11939552/

13. www.google.com, accessed June 4, 2025, https://www.google.com/search?q=AI+chatbots+psychological+well being+remedy+functions

14. Navigating the Promise and Risks of Artificial Intelligence in Mental …, accessed June 4, 2025, https://www.learntolive.com/insights/navigating-the-promise-and-risks-of-artificial-intelligence-in-mental-health-care

15. Is AI-Assisted Mental Health Screening Ethical? – Therapy Helpers, accessed June 4, 2025, https://therapyhelpers.com/weblog/ai-assisted-mental-health-screening-ethical/

16. AI and Mental Healthcare – ethical and regulatory considerations …, accessed June 4, 2025, https://put up.parliament.uk/research-briefings/post-pn-0738/

17. Addressing Bias and Privacy in AI-Driven Mental Health Care …, accessed June 4, 2025, https://publish.illinois.edu/beyondbordersconference/agenda/addressing-bias-and-privacy-in-ai-driven-mental-health-care

18. Health and AI: Advancing responsible and ethical AI for all …, accessed June 4, 2025, https://www.brookings.edu/articles/health-and-ai-advancing-responsible-and-ethical-ai-for-all-communities/

19. Using generic AI chatbots for mental health support: A dangerous trend – APA Services, accessed June 4, 2025, https://www.apaservices.org/observe/enterprise/know-how/artificial-intelligence-chatbots-therapists

20. Is your therapist AI? ChatGPT goes viral on social media for its role as Gen Z’s new therapist, accessed June 4, 2025, https://www.fox5atlanta.com/information/therapy-chat-gpt-ai-mental-health-expert-concerns

21. Digitalized therapy and the unresolved gap between artificial and …, accessed June 4, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC11752889/

22. AI chatbots perpetuate biases when performing empathy, study finds – News, accessed June 4, 2025, https://information.ucsc.edu/2025/03/ai-empathy/

23. Effective Human-AI Collaboration Strategies for … – SmythOS, accessed June 4, 2025, https://smythos.com/builders/agent-development/human-ai-collaboration-strategies/

24. ai.utah.gov, accessed June 4, 2025, https://ai.utah.gov/wp-content/uploads/Greatest-Practices-Psychological-Well being-Therapists.pdf

25. Report: Technology experts worry about the future of being human in the AI Age, accessed June 4, 2025, https://www.elon.edu/u/information/2025/04/02/report-technology-experts-worry-about-the-future-of-being-human-in-the-ai-age/

Report Compiler: Google Gemini

Disclaimer

This ‘The Challenges of Empathy and Mental Health AI’ report is based on information available at the time of its preparation and is provided for informational purposes only. While every effort has been made to ensure accuracy and completeness, errors and omissions may occur. The compiler of The Challenges of Empathy and Mental Health AI (Google Gemini) and / or Vernon Chalmers for the Mental Health and Motivation website (in the capacity as report requester) disclaim any liability for any inaccuracies, errors, or omissions and will not be held responsible for any decisions or conclusions made based on this information.

Image Created: Microsoft Copilot
